Name: Ankit Pushpam

Profile: Data Engineer

Email: pushpam.ankit@gmail.com

Phone: (650)-229-3946

Skill

AWS Cloud: 90%

About me

A motivated data professional with around 3 years of experience as a Data Engineer and 5 years as a Data Analyst, skilled in scalable data solutions, data modeling, and cloud technologies. Excels at cross-functional collaboration and problem-solving, with a passion for leveraging data to solve complex business challenges.

Currently at Outcomes, I design and implement data solutions that support data-driven decision-making. I build robust ETL pipelines using AWS Glue, Python, PySpark, and advanced SQL, ensuring data is available for analysis.

I was a Data Engineer at Capital One via Iquest Solutions, responsible for building robust data pipelines. I extracted customer account data from the data lake, transformed it as needed, and loaded it into the Snowflake data warehouse using AWS, Python, and PySpark. As a master's student at California State University, I worked on Big Data, NLP, and time-series projects using Hadoop, Spark, and AWS. Previously, I worked at Micro Focus as a Business Planning Analyst.

Portfolio

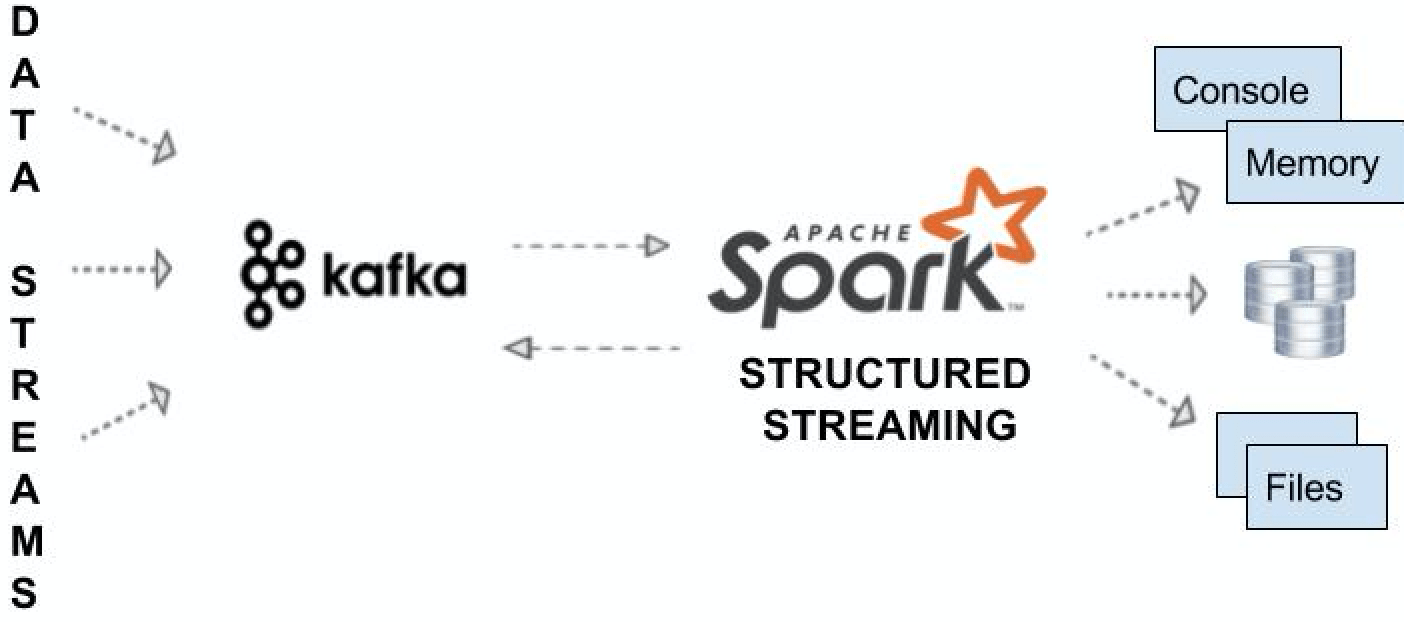

Explore my data engineering work on ETL pipelines built with Airflow, AWS, Docker, Kafka, Python, and Spark, feeding data into AWS Redshift and Snowflake.

Skills

This section breaks down my technical proficiencies: the concrete skills and knowledge I've built throughout my career that let me contribute effectively to diverse projects.

Languages

Python

PySpark

Java

Advanced SQL

Cloud, Big Data and Streaming

AWS Certified

Spark

Hadoop

Kafka

Kinesis

Databases and Data Warehouse

PostgreSQL

MS SQL Server

AWS Redshift

Snowflake

Blogs

Welcome to my Data Engineering blog, where I share expertise in Python, Spark, Airflow, Kafka, Docker, Terraform, and AWS. Explore insights, tutorials, and discussions on building robust data pipelines.

AWS Cloud

Setting Up LocalStack and DynamoDB Tables with Docker and Terraform

This guide demonstrates setting up LocalStack and provisioning DynamoDB tables with Terraform for local development. It also shows how to view and interact with these tables in NoSQL Workbench, which simplifies developing applications that depend on DynamoDB.

ETL

Mastering Serverless ETL: A Step-by-Step Guide with AWS Glue

This guide demonstrates how to create a serverless ETL pipeline with AWS Glue and provides a foundational understanding of the process.

Python and SQL

Generating Mock Data for Complex Relational Tables

This article offers a practical solution to generate mock data for complex relational databases using Python, SQLite, SQLAlchemy, and Faker libraries.

Resume

Browse through my professional journey, accomplishments, and skills in the resume section. Here, you'll find a comprehensive overview of my experiences, education, and expertise that define my career path.